Introduction:

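DRBD (Distributed Replicated Block Device) is a distributed storage system for Linux. In addition to its kernel module, it includes several user-space management programs and shell scripts. It is typically used in high-availability (HA) clusters and is free software released under the GPL v2.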

Hardware:

SuperMicro 6026TT-HIBXF

- Intel Xeon Quad-Core x2

- 24GB DDR3 RAM

Environment:

VMware vSphere ESX 4.1 update1

- CentOS 5.5 x86_64(2.6.18-194.el5)

- drbd 8.3.8-1.centos.x86_64

Network environment:

Setup SOP:

- Install CentOS 5.5 on both nodes. Make sure to create a separate partition for DRBD to use.
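Before the partition is handed to DRBD, it can help to confirm that it exists and is not mounted. This matters especially because the later dd step destroys its contents. The following is a minimal sketch, assuming the backing device is hdb1 as in the drbd.conf example. The functions partition_exists and is_mounted are hypothetical helpers, not part of DRBD.

```shell
#!/bin/bash
# Pre-flight check for the partition reserved for DRBD (a sketch).
# Assumes the backing device is hdb1, as in the drbd.conf example.

# partition_exists NAME [PARTITIONS_FILE]
# Succeeds if NAME (e.g. hdb1) appears in the "name" column (4th field)
# of /proc/partitions.
partition_exists() {
    local name="$1" file="${2:-/proc/partitions}"
    awk -v n="$name" '$4 == n { found = 1 } END { exit !found }' "$file"
}

# is_mounted DEVICE [MOUNTS_FILE]
# Succeeds if DEVICE (e.g. /dev/hdb1) is the source of a mount in /proc/mounts.
is_mounted() {
    local dev="$1" file="${2:-/proc/mounts}"
    awk -v d="$dev" '$1 == d { found = 1 } END { exit !found }' "$file"
}
```

For example, on each node: `partition_exists hdb1 && ! is_mounted /dev/hdb1 && echo "hdb1 looks ready for DRBD"`.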

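- Set the hostname in /etc/hosts and /etc/sysconfig/network, or configure DNS correctly. DRBD matches each node to an "on <hostname>" section of drbd.conf using the output of uname -n, so the names must agree.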
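A quick check on each node can catch hostname mismatches before DRBD is started. The following is a minimal sketch, assuming the hostnames drbd-node1.ngc and drbd-node2.ngc from the configuration example. The functions drbd_host_ok and host_in_hosts_file are hypothetical helper names.

```shell
#!/bin/bash
# Sketch: hostname sanity checks for the two DRBD nodes.
# Assumes the "on" section names drbd-node1.ngc / drbd-node2.ngc.

# drbd_host_ok [NAME]
# Succeeds if NAME (default: `uname -n`) is one of the configured node names.
drbd_host_ok() {
    local name="${1:-$(uname -n)}"
    case "$name" in
        drbd-node1.ngc|drbd-node2.ngc) return 0 ;;
        *) return 1 ;;
    esac
}

# host_in_hosts_file NAME [HOSTS_FILE]
# Succeeds if NAME appears as a hostname or alias on a non-comment line.
host_in_hosts_file() {
    local name="$1" file="${2:-/etc/hosts}"
    awk -v n="$name" '
        /^[ \t]*#/ { next }   # skip whole-line comments
        { for (i = 2; i <= NF && $i !~ /^#/; i++) if ($i == n) found = 1 }
        END { exit !found }
    ' "$file"
}
```

For example, on drbd-node1.ngc: `drbd_host_ok && host_in_hosts_file drbd-node2.ngc && echo "hostnames look consistent"`.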

- Install the DRBD packages (required on both nodes).

#yum install drbd83 kmod-drbd83

- Edit the DRBD configuration file /etc/drbd.conf (the same file is needed on both nodes). The following is an example for reference.

global {
    minor-count 64;
    usage-count yes;
}

common {
    syncer { rate 1000M; }    # max resync bandwidth (1000M = 1000 MB/s)
}

resource drbdha {    # drbdha is the resource name
    protocol C;      # replication protocol (C = synchronous)

    handlers {
        pri-on-incon-degr "/usr/lib/drbd/notify-pri-on-incon-degr.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";
        pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";
        local-io-error "/usr/lib/drbd/notify-local-io-error.sh; /usr/lib/drbd/notify-emergency-shutdown.sh; echo o > /proc/sysrq-trigger ; halt -f";
        pri-lost "/usr/lib/drbd/notify-pri-lost.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";
        split-brain "/usr/lib/drbd/notify-split-brain.sh root";
        out-of-sync "/usr/lib/drbd/notify-out-of-sync.sh root";
    }

    startup {
        wfc-timeout 60;
        degr-wfc-timeout 120;
        outdated-wfc-timeout 2;
    }

    disk {
        on-io-error detach;
        fencing resource-only;
    }

    syncer {
        rate 1000M;
    }

    on drbd-node1.ngc {
        device /dev/drbd0;
        disk /dev/hdb1;    # partition used by DRBD
        address 192.168.104.161:7788;
        meta-disk internal;
    }

    on drbd-node2.ngc {
        device /dev/drbd0;
        disk /dev/hdb1;    # partition used by DRBD
        address 192.168.104.162:7788;
        meta-disk internal;
    }
}
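Because both nodes need the same /etc/drbd.conf, one option is to parse-check the file locally and then copy it to the peer. The following is a minimal sketch, assuming root can scp from drbd-node1.ngc to drbd-node2.ngc. The function push_drbd_conf is a hypothetical helper. The command drbdadm -c FILE dump all is used only as a syntax check, since drbdadm should exit non-zero if the file cannot be parsed.

```shell
#!/bin/bash
# Sketch: syntax-check drbd.conf locally, then copy it to the peer node.
# Assumes root scp access to the peer; push_drbd_conf is a hypothetical helper.

# push_drbd_conf PEER [CONF]
push_drbd_conf() {
    local peer="$1" conf="${2:-/etc/drbd.conf}"
    # drbdadm parses CONF and should fail on syntax errors;
    # the dumped configuration itself is discarded.
    if ! drbdadm -c "$conf" dump all > /dev/null; then
        echo "push_drbd_conf: $conf failed to parse, not copying" >&2
        return 1
    fi
    # Copy to the same path on the peer so both nodes use an identical file.
    scp "$conf" "root@${peer}:/etc/drbd.conf"
}
```

For example, on drbd-node1.ngc: `push_drbd_conf drbd-node2.ngc`.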

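- Adjust the permissions so that members of the haclient group (created when Heartbeat is installed) can run drbdsetup and drbdmeta with root privileges (setuid), while other users cannot run them.

#chgrp haclient /sbin/drbdsetup
#chmod o-x /sbin/drbdsetup
#chmod u+s /sbin/drbdsetup
#chgrp haclient /sbin/drbdmeta
#chmod o-x /sbin/drbdmeta
#chmod u+s /sbin/drbdmeta

- Load the DRBD module and verify it.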

#modprobe drbd        # load the drbd module
#lsmod | grep drbd    # check that the drbd module is loaded
drbd 228528 0

- Create the resource (on both nodes).

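#dd if=/dev/zero of=/dev/hdb1 bs=1M count=100

This overwrites the first 100 MB of hdb1 with zeros to wipe any existing file system on it; otherwise create-md may refuse to run. All data on the partition is destroyed.

#drbdadm create-md drbdha    # create the DRBD metadata for resource drbdha

- Start the DRBD service (on both nodes).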

#service drbd start    # start the drbd service
#chkconfig drbd on     # start drbd automatically at boot

- Check whether the service started successfully:

#service drbd status
drbd driver loaded OK; device status:
version: 8.3.8 (api:88/proto:86-94)
GIT-hash: d78846e52224fd00562f7c225bcc25b2d422321d build by mockbuild@builder10.centos.org, 2010-06-04 08:04:16
m:res cs ro ds p mounted fstype
0:drbdha Connected Secondary/Secondary Inconsistent/Inconsistent C

- Run the initial synchronization of the two hosts' data (on the primary node only). The --overwrite-data-of-peer option makes this node Primary and overwrites the peer's data with the local data.

#drbdadm -- --overwrite-data-of-peer primary drbdha
#service drbd status
drbd driver loaded OK; device status:
version: 8.3.8 (api:88/proto:86-94)
GIT-hash: d78846e52224fd00562f7c225bcc25b2d422321d build by mockbuild@builder10.centos.org, 2010-06-04 08:04:16
m:res cs ro ds p mounted fstype
... sync'ed: 5.7% (9660/10236)M delay_probe:
0:ha SyncSource Primary/Secondary UpToDate/Inconsistent C

- After formatting, the device can be mounted and used normally (on the primary node only; create the mount point /drbd first if it does not exist).

[root@drbd-node1 ~]# mkfs -t ext3 /dev/drbd0
mke2fs 1.39 (29-May-2006)
Filesystem label=
OS type: Linux
Block size=4096 (log=2)
Fragment size=4096 (log=2)
6553600 inodes, 13106615 blocks
655330 blocks (5.00%) reserved for the super user
First data block=0
Maximum filesystem blocks=4294967296
400 block groups
32768 blocks per group, 32768 fragments per group
16384 inodes per group
Superblock backups stored on blocks:
32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,
4096000, 7962624, 11239424
Writing inode tables: done
Creating journal (32768 blocks): done
Writing superblocks and filesystem accounting information: done
This filesystem will be automatically checked every 34 mounts or
180 days, whichever comes first. Use tune2fs -c or -i to override.

[root@drbd-node1 ~]# mount /dev/drbd0 /drbd
[root@drbd-node1 ~]# df
/dev/mapper/VolGroup00-LogVol00
120266820 2007008 112051916 2% /
/dev/sda1 101086 16155 79712 17% /boot
tmpfs 384160 0 384160 0% /dev/shm
/dev/drbd0 51603800 184272 48798208 1% /drbd
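The status lines shown during setup follow the column header "m:res cs ro ds p mounted fstype". To check from a script that the initial sync has finished, a resource line can be split into those columns. The following is a minimal sketch, assuming one resource per line with whitespace-separated columns as in the output above. The functions drbd_status_field and drbd_in_sync are hypothetical helpers.

```shell
#!/bin/bash
# Sketch: parse one resource line of `service drbd status`, e.g.
#   0:drbdha Connected Secondary/Secondary Inconsistent/Inconsistent C
# Columns follow the header: m:res cs ro ds p mounted fstype

# drbd_status_field LINE FIELD    (FIELD: res, cs, ro, ds or p)
drbd_status_field() {
    local res cs ro ds p rest
    # Split on whitespace without glob expansion.
    read -r res cs ro ds p rest <<EOF
$1
EOF
    case "$2" in
        res) echo "${res#*:}" ;;   # drop the minor-number prefix "0:"
        cs)  echo "$cs" ;;
        ro)  echo "$ro" ;;
        ds)  echo "$ds" ;;
        p)   echo "$p" ;;
        *)   return 1 ;;
    esac
}

# drbd_in_sync LINE: succeeds when connected and both disks are UpToDate.
drbd_in_sync() {
    [ "$(drbd_status_field "$1" cs)" = "Connected" ] &&
        [ "$(drbd_status_field "$1" ds)" = "UpToDate/UpToDate" ]
}
```

For example: `line=$(service drbd status | grep '^0:'); drbd_in_sync "$line" && echo "initial sync finished"`.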

Other notes:

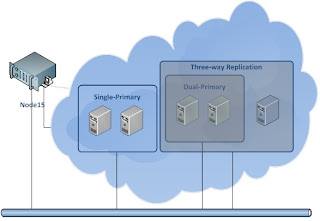

- A DRBD partition can be mounted only on the primary node (unless dual-primary mode is used).

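- In a typical single-primary setup, DRBD has to be paired with a service managed by Heartbeat to provide high availability. Heartbeat moves the DRBD Primary role, the file system mount, and the service to the surviving node together.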

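- The two nodes can switch between the primary and secondary roles at any time. In single-primary mode, unmount the device and demote the current primary before promoting the other node.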

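- To enable dual-primary mode, add "allow-two-primaries;" to the net section (create one in the resource if it does not exist) and "become-primary-on both;" to the startup section of /etc/drbd.conf. Then format the DRBD partition with a cluster file system such as OCFS2 or GFS (not yet tested).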
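In single-primary mode, the role switch described in the notes amounts to demoting the current primary and then promoting the other node. The following is a minimal sketch, assuming the resource drbdha, device /dev/drbd0, and mount point /drbd from this setup. The functions run, demote_local, and promote_local are hypothetical helpers, and setting DRY_RUN=1 only prints the commands. In a Heartbeat cluster, Heartbeat would typically perform these steps itself.

```shell
#!/bin/bash
# Sketch: manual role switch in single-primary mode.
# Assumes resource drbdha, device /dev/drbd0 and mount point /drbd.
# run, demote_local and promote_local are hypothetical helpers.

# run CMD...: execute CMD, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# demote_local RES MOUNTPOINT: on the current primary, release the device.
demote_local() {
    run umount "$2" && run drbdadm secondary "$1"
}

# promote_local RES DEVICE MOUNTPOINT: on the other node, take over.
promote_local() {
    run drbdadm primary "$1" && run mount "$2" "$3"
}
```

For example, run `demote_local drbdha /drbd` on drbd-node1.ngc first, then run `promote_local drbdha /dev/drbd0 /drbd` on drbd-node2.ngc.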
